Implementing Deep Reinforcement Learning (DRL)-based Driving Styles for Non-Player Vehicles

DOI:

https://doi.org/10.17083/ijsg.v10i4.638

Keywords:

Reinforcement Learning, Automotive Driving, Serious Games, Autonomous Agents, Racing Games, Driving Styles, Decision Making, PPO

Abstract

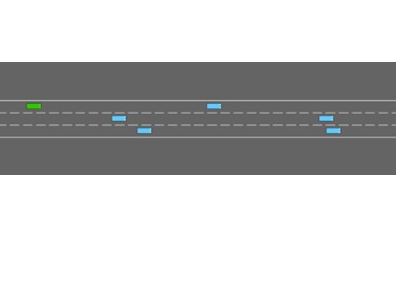

We propose a new hierarchical architecture for the behavioral planning of vehicle models that can serve as realistic non-player vehicles in serious games related to traffic and driving. These agents, trained with deep reinforcement learning (DRL), control their motion through high-level decisions such as “keep lane”, “overtake” and “go to rightmost lane”. This mirrors a driver’s high-level reasoning and takes into account the availability of advanced driver assistance systems (ADAS) in current vehicles. Compared to a low-level decision-making system, our model performs better in terms of both safety and speed. A significant advantage is that the proposed approach reduces the number of training steps by more than one order of magnitude. This makes developing new models much more efficient, which is key for implementing vehicles with different driving styles. We also demonstrate that simply tweaking the reinforcement learning (RL) reward function is enough to train agents with different driving behaviors. In addition, we employed continual learning, starting the training of a more specialized agent from a base model. This significantly reduced the number of training steps while keeping similar vehicular performance figures. However, the characteristics of the specialized agents are deeply influenced by those of the baseline agent.
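As a rough illustration of the two ideas in the abstract (a discrete set of high-level decisions, and driving styles obtained by changing the reward function), the following minimal Python sketch may help. It is not the authors' implementation: the names `HighLevelAction`, `StyleWeights`, `CAUTIOUS`, `AGGRESSIVE` and `reward`, as well as the reward terms and all numeric weights, are assumptions chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class HighLevelAction(Enum):
    """The three high-level decisions named in the abstract."""
    KEEP_LANE = 0
    OVERTAKE = 1
    GO_TO_RIGHTMOST_LANE = 2


@dataclass(frozen=True)
class StyleWeights:
    """Hypothetical reward weights; a driving style is one set of weights."""
    speed: float        # reward for driving close to the target speed
    collision: float    # penalty applied when a collision occurs
    lane_change: float  # penalty for any decision other than KEEP_LANE


# Illustrative presets (assumed values, not taken from the paper).
CAUTIOUS = StyleWeights(speed=0.5, collision=10.0, lane_change=0.2)
AGGRESSIVE = StyleWeights(speed=1.5, collision=5.0, lane_change=0.0)


def reward(weights: StyleWeights, speed: float, target_speed: float,
           collided: bool, action: HighLevelAction) -> float:
    """Per-step reward for one agent under the given style weights."""
    # Speed term saturates at the target speed so overspeeding is not rewarded.
    speed_term = weights.speed * min(speed / target_speed, 1.0)
    collision_term = weights.collision if collided else 0.0
    lane_change_term = (
        weights.lane_change if action is not HighLevelAction.KEEP_LANE else 0.0
    )
    return speed_term - collision_term - lane_change_term
```

Under these assumed weights, the same situation (driving at target speed while overtaking, with no collision) is scored higher for the `AGGRESSIVE` preset than for the `CAUTIOUS` one. This is the sense in which changing only the reward function can steer training toward a different driving style.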

License

Copyright (c) 2023 Luca Forneris, Alessandro Pighetti, Luca Lazzaroni, Francesco Bellotti, Alessio Capello, Marianna Cossu, Riccardo Berta

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.
